Hello all!

Some of you might already know me, especially if you already use Papagayo-NG.

Konstantin offered to let me use this platform to write about Papagayo-NG.

So in this post I will try to give you an overview of what has happened with Papagayo-NG in the recent past and a sneak peek at what is planned for the future.

Quick Overview of Papagayo-NG

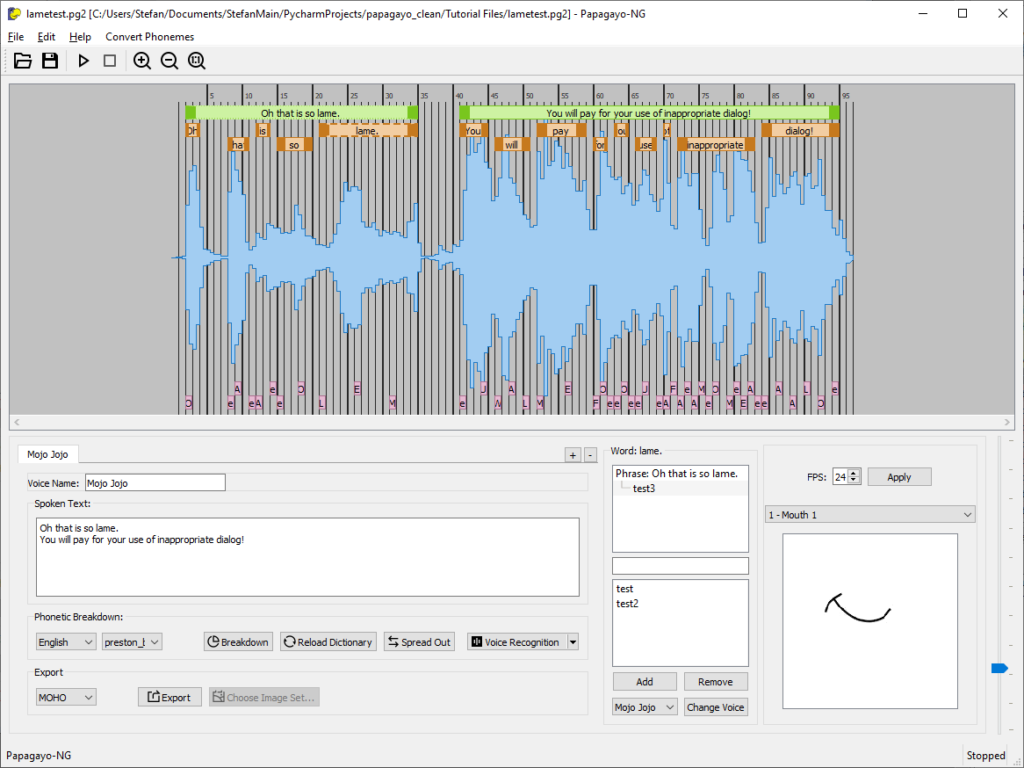

For those of you who don’t know Papagayo-NG yet, it is a tool that helps you create lip sync for your projects, be it animations, games or anything else.

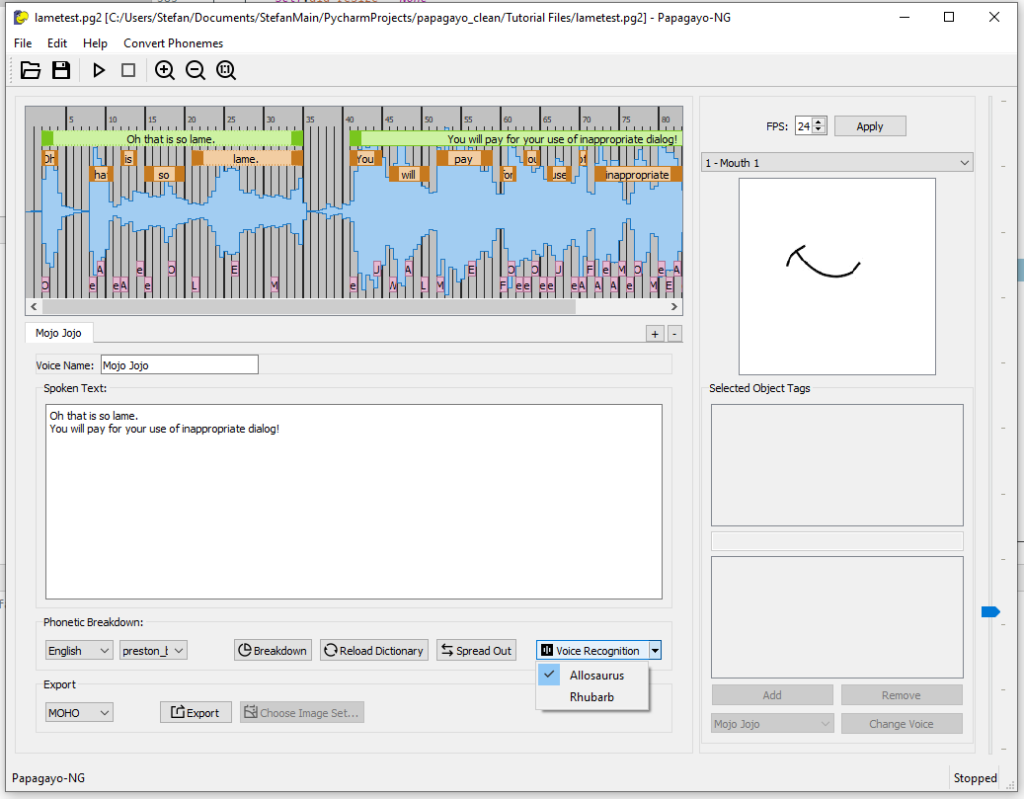

Currently it looks kinda like this:

So, the usual way to work with this is kinda like this:

- Open a sound file you want to transcribe

- Type or Copy/Paste the spoken text for that sound

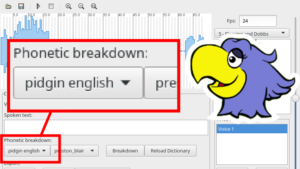

- Select the phoneme set, the language and the FPS you want

- Click on Breakdown

- Align the Phrases, Words and Phonemes to fit the sound

- Save and Export to your desired format

What has changed?

For some time now we have had experimental support for automatic phoneme recognition via Rhubarb.

It works, though not very well, but it can be enough in many cases.

With the advent of machine learning and AI, many projects working in this general domain have appeared.

One such project is Allosaurus by Xinjian Li, which uses machine learning to recognize phonemes directly from an audio file, with pretty good accuracy in my tests.

Here is an example I made during my first tests:

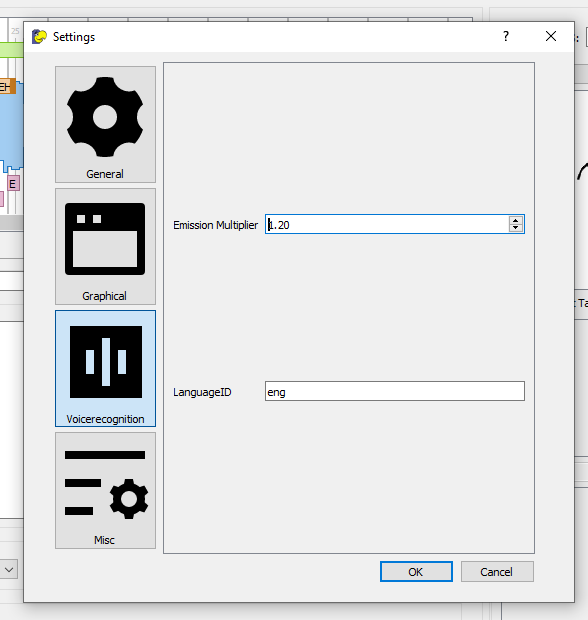

As you can see, the results are pretty good. Allosaurus allows some customization, part of which is accessible in our new Settings dialog.
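To place recognized phonemes on the timeline, their timestamps have to be mapped to frame numbers at the project's FPS. Here is a minimal sketch of that conversion. It assumes the recognizer output is plain text with one `start duration phoneme` entry per line, with times in seconds. The function name and the sample values are made up for illustration, and this is not the actual Papagayo-NG code:

```python
def phonemes_to_frames(timestamped_text, fps=24):
    """Map 'start duration phoneme' lines to (frame, phoneme) pairs."""
    result = []
    for line in timestamped_text.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue  # skip empty or malformed lines
        start_seconds = float(parts[0])
        phoneme = parts[2]
        # Frame index of the moment the phoneme starts.
        frame = int(round(start_seconds * fps))
        result.append((frame, phoneme))
    return result


# Made-up sample data, only to show the assumed shape of the input.
sample = "0.50 0.045 h\n1.00 0.045 e\n1.25 0.045 l"
print(phonemes_to_frames(sample, fps=24))  # [(12, 'h'), (24, 'e'), (30, 'l')]
```

Rounding to the nearest frame keeps each phoneme as close as possible to where it starts in the audio. The duration column is ignored here because only the start frame is needed for placement.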

After this I wanted to add an option to move selected phrases, words or phonemes between different voices.

This is needed because Allosaurus does not distinguish between speakers, so everything it recognizes belongs to a single voice.

I previously added a Tree System with Nodes for those objects to better manage them for our Waveformview.

So, I restructured a lot of the code to use this Node System instead.

Before this we had separate classes for voices, phrases, words and phonemes. I created a new “LipSyncObject” class which can be any of those.

This is a subclass of the NodeMixin from Anytree, so our LipSyncObjects are themselves parts of the tree.

In theory moving a phrase from one voice to another should be doable by simply changing its .parent to the desired voice object.
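The idea of moving an object by reassigning its parent can be sketched like this. It is a minimal pure-Python stand-in, not the actual Papagayo-NG code: the `Node` class below only imitates the parent/children behaviour that Anytree's NodeMixin provides, and the names `Node`, `kind` and `text` are made up for illustration.

```python
class Node:
    """Tiny stand-in for a tree node whose parent can be reassigned."""

    def __init__(self, kind, text, parent=None):
        self.kind = kind      # e.g. "voice", "phrase", "word", "phoneme"
        self.text = text
        self.children = []
        self._parent = None
        self.parent = parent  # goes through the setter below

    @property
    def parent(self):
        return self._parent

    @parent.setter
    def parent(self, new_parent):
        # Detach from the old parent, then attach to the new one.
        if self._parent is not None:
            self._parent.children.remove(self)
        self._parent = new_parent
        if new_parent is not None:
            new_parent.children.append(self)


voice_1 = Node("voice", "Voice 1")
voice_2 = Node("voice", "Voice 2")
phrase = Node("phrase", "Hello all", parent=voice_1)
word = Node("word", "Hello", parent=phrase)

# Moving the phrase takes its words (and their phonemes) along with it.
phrase.parent = voice_2
print([child.text for child in voice_1.children])  # []
print([child.text for child in voice_2.children])  # ['Hello all']
```

With Anytree itself the move is the same single assignment to `.parent`; the library updates the children of both voices.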

A lot of the logic handling has become much easier. Before, it was even more intermixed inside the MovableButton objects for the Waveformview.

A smaller change I added was the possibility to change some styling and colors.

The new settings menu now lets you change the colors of the objects in the Waveformview.

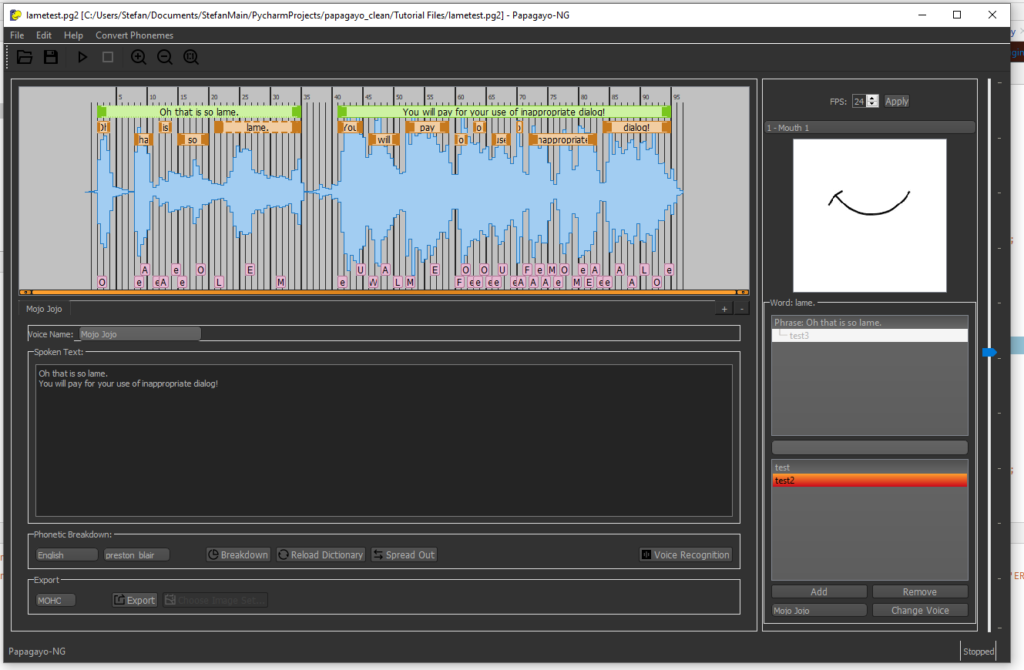

As you can see, you can also select a QSS stylesheet, which changes the appearance of the whole program.

For example, here is how Papagayo-NG looks with the style “Obit” from the QSS Stock Webpage:

Other improvements include:

- A new JSON-based project format and a JSON-based export format. These should make it easier for programmers to use this in their own applications. Feel free to contact us if you need help or have suggestions for this.

- A new About dialog which removes the dependency on the built-in WebEngine of Qt.

- A new, improved installer script and a new NSIS-based installer/uninstaller.

- A new icon set. As you’ve seen in the screenshots here, we are now using icons from the Remix Icon Project.

So what will happen in the future of Papagayo-NG?

There are a few things I’m working on.

- Cut/Copy and Paste of the objects in the Waveformview. This should now be a bit more viable thanks to our new Tree System, but there are some possible corner cases we need to handle.

- A Command Line Interface. As I’ve explained in Issue #105, I want to add a CLI to make batch processing of several files easier. This might allow Papagayo-NG to be used in bigger projects.

- I’m also working on a possible alternative layout. Since the text region is no longer the main focus, especially now that phonemes can be recognized automatically, a new layout focused more on the waveform might make sense. In a test I changed this around a bit, as you can see here:

How to get updates for Papagayo-NG?

There are several ways to do that.

Of course you can hop over to GitHub and download the code as usual. You will need Python and some dependencies.

There are installers and binary packages available for some systems.

I improved the installer for Windows and have been posting updates semi-regularly to my Patreon. You can also find the binaries in my GitHub fork: Steveways Papagayo-NG Fork

You can also get AppImage builds, currently created by Konstantin. He updated the scripts to create these, as you can see in Issue #99.

We will likely concentrate releases on this page for Papagayo-NG.

How to support development of Papagayo-NG?

There are a few ways you can support the development of Papagayo-NG.

You can directly support me via Patreon or Gumroad.

Alternatively, you can send money via this Gumroad from Morevna. I automatically receive a cut of that.

You can also help by opening tickets on our GitHub or by forking and creating pull requests.

If you want to work on a feature or a bug, it would be best to first contact me via a ticket on GitHub or take a look at my fork to see whether I have already fixed or added it.

– @steveway

2 Responses

This looks astonishing. This sure will be fun.

The AppImage for Linux does not work. It launches the program, but it won’t let me open any sound file type. I tried .wav and .mp3 and it simply will not.